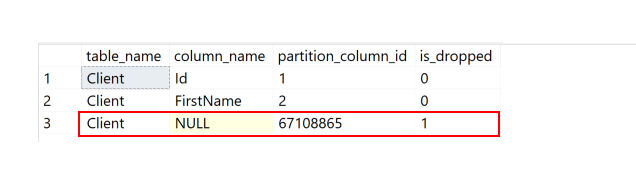

In my last article, Identify Tables With Dropped Columns, we saw how to identify tables that have dropped columns.

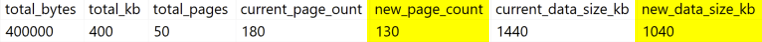

Today, we will look at a way to estimate how much space we can expect to recover by rebuilding the table.

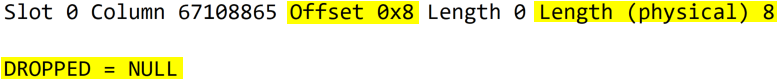

If you want to read the first article I published on this subject, you can find it here: What happens when we drop a column on a SQL Server table? Where’s my space?